Jeni Paay

Research Projects

My current research aims to advance the design of human-technology interactions, focussing on assistive technologies, health care worker education, digital place-making, human-AI interaction, user experience for VR, and smart and sustainable cities and homes. It utilises design practices for community engagement and participation to explore design solutions.

AI as the Most Valuable Player

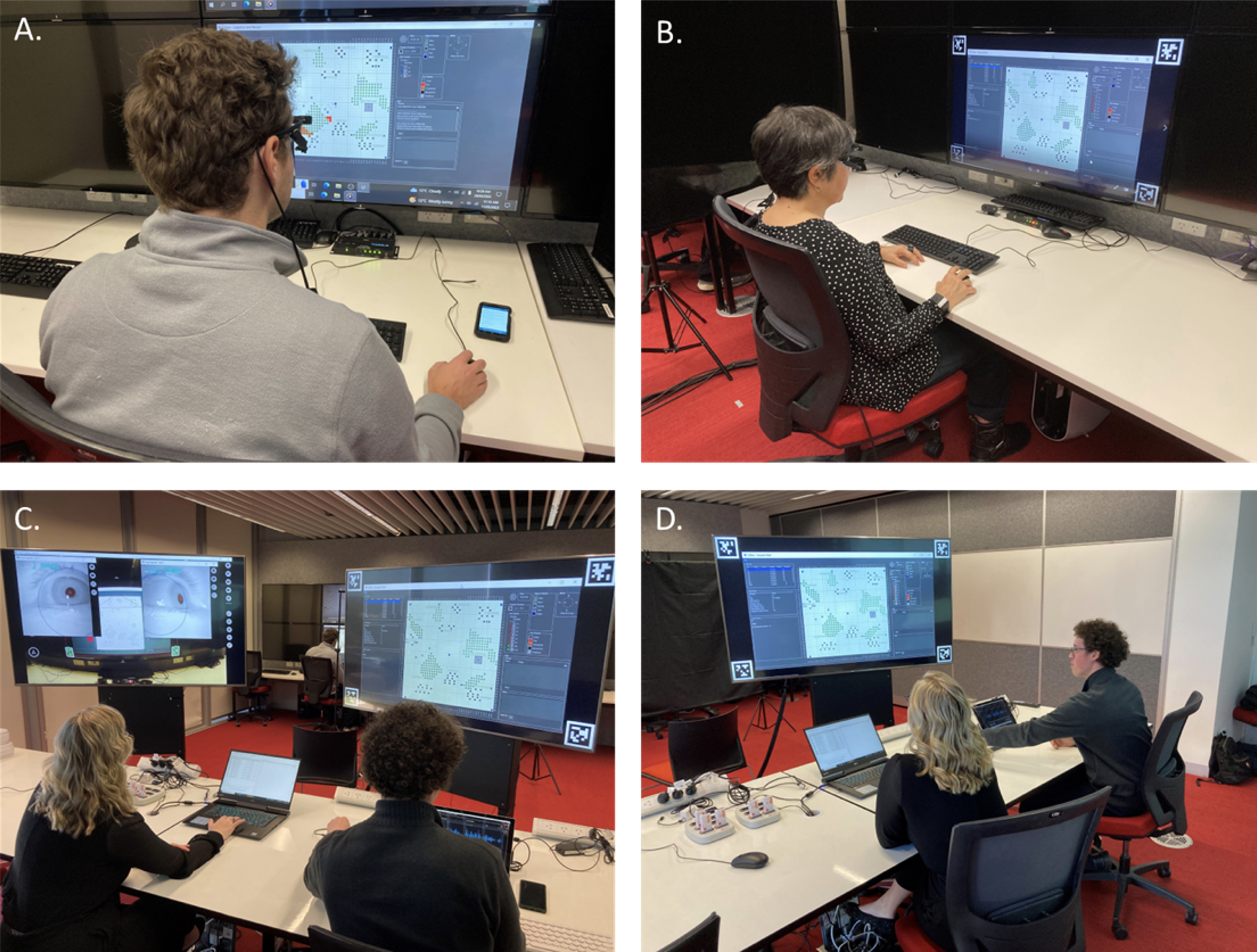

Human performance in time-critical decision making is influenced by a combination of mental workload, stress, situational awareness and expertise. This project is designed to investigate what role Artificial Intelligence (AI) can play as a monitor of individual decision-making performance, and as a team member in a Human-AI team where the AI is the MVP. The research uses measures of biometrics, eye-tracking, passive sensing, interaction analytics, self-reporting and interviews. This was an interdisciplinary project involving astronomy, aviation science, sports science, psychology, computer science and HCI. The research was funded by Australian Government Defence, Next Generation Technologies Fund, DAIRNet.

VR for Learning

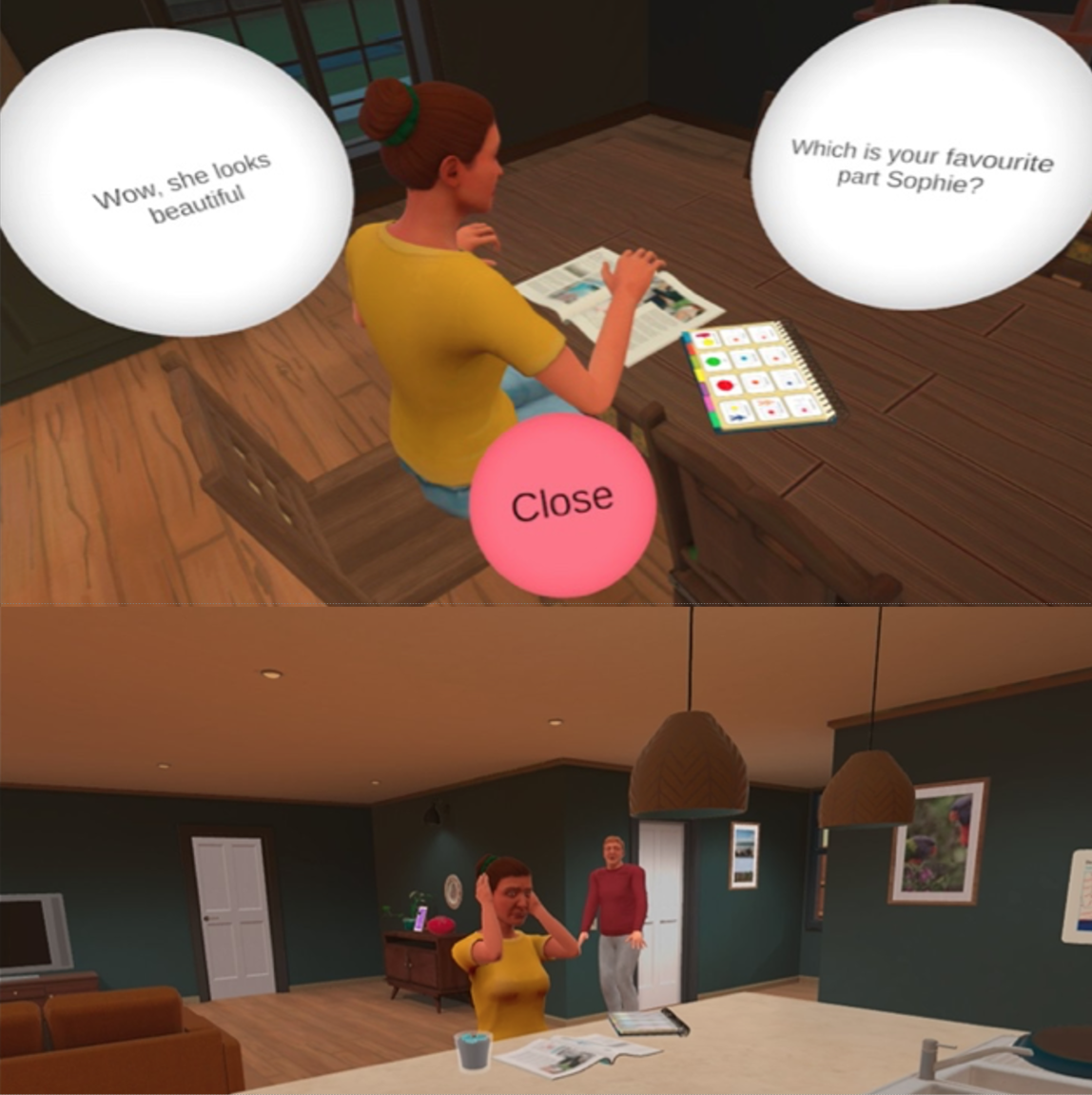

Virtual Reality (VR) as an environment to simulate situations can be used for workplace learning, especially in situations where there is a safety concern for the learner. The Safety at Work project works with SCOPE and Swinburne PAVE to look at the safe training of Disability Support Workers, through the simulation of home situations with clients, where the learner must make the right choices to help the client in moments of stress. Five VR scenarios were developed to deliver key learning outcomes of Positive Behaviour Support training to VET students in courses incorporating Disability Support content, and as professional development to Disability Support Workers (DSW) working in the field. Key outcomes were: VR scenarios provided a highly engaging and effective training experience for both novice and expert groups; VR training embedded within face-to-face learning is critical to success; and remote delivery of VR is feasible.

In the Safe and Successful Places project we are using VR to simulate different street conditions, allowing people to evaluate how those conditions affect their feelings of safety and enjoyment, in order to design successful streetscapes in future planning for cities. This project is a transdisciplinary investigation under the Future Spaces for Living and Future Mobility programs of the Smart Cities Research Institute and supported by Transport New South Wales and iMOVE. This project developed and tested a prototype streetscape design assessment system using immersive virtual environments (IVE), to gain insights into citizen perceptions of design elements and safe system treatments. The results showed that vast improvements to the experiential qualities and pedestrian perceptions of main streets can be achieved through relatively small, inexpensive design changes such as reducing traffic noise and speed, increasing pedestrian separation from traffic, providing safe cycling and pedestrian-prioritised crossing conditions, and increasing tree canopy cover.

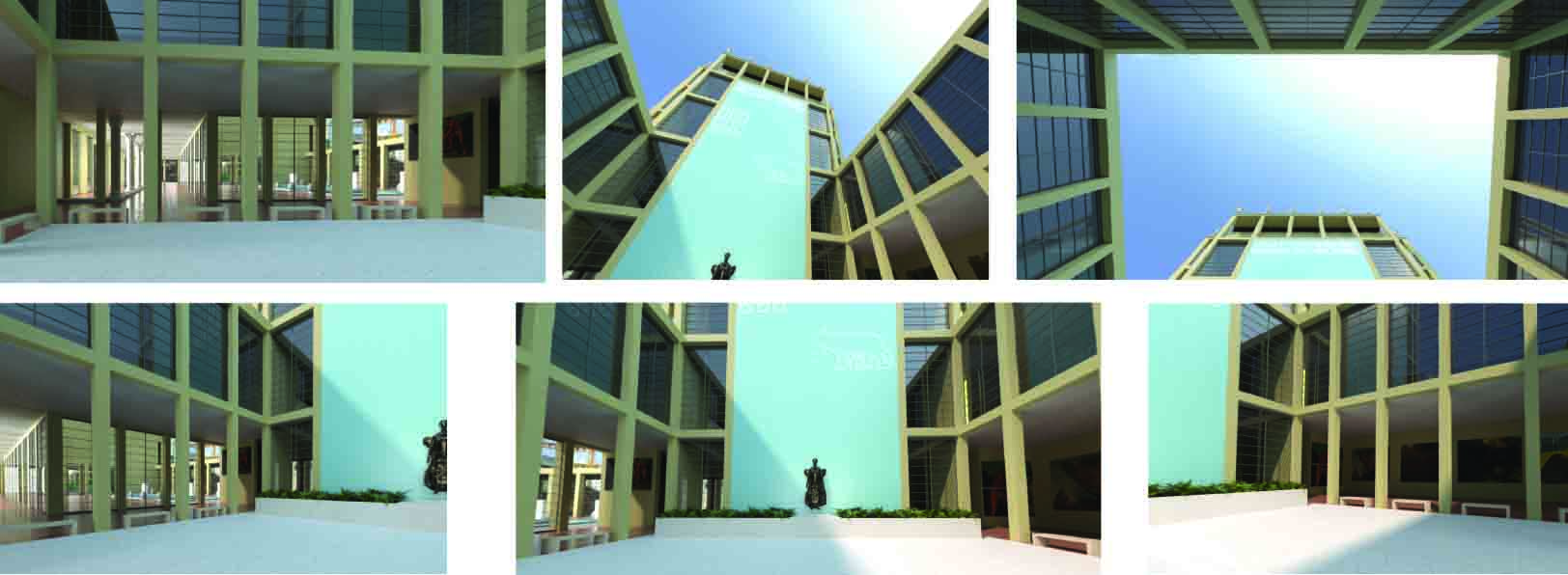

Virtual Reality (VR) can also be used to create experiences of past innovations in design, such as the architectural explorations of new materials and technologies that took place at the World Expositions. These temporary, and yet highly influential, constructions seldom survive beyond the life of the expo. Some are transported to new locations and reconstructed, while others are lost forever to human embodied experience. VR can be used to reconstruct these designs and give students and scholars a virtual experience of walking through them, inspiring future design and thus contributing to the design and planning of innovative aspects of future cities. The Learning from Lost Architecture project has reconstructed the Italian Pavilion from the 1937 Paris Expo, and will continue to add to this experience with other structures that can be experienced through VR.

Future Spaces For Living & Working

What people do in private settings such as their homes can be difficult to study in situ, including how they conduct cooperative and interpersonal activities with others, such as cooking together, and how they interact with digital personal assistants. There have been many different solutions to this problem. We propose an effective way of accessing this information, Digital Ethnography, which specifically looks at YouTube videos to gain access to people’s homes and private moments and activities that they share with others. In the Cooking Together project, we adapted research and annotation methods from sociology for mapping people’s movements in a space to analyse the formations people make when they cook together. We then classified these formations with respect to the different cooking activities that took place. This work provides a foundational understanding of domestic interactions, supporting the design of technological interventions for homes. This included work that focused on supporting intergenerational relationships over a distance, including through the act of cooking together.

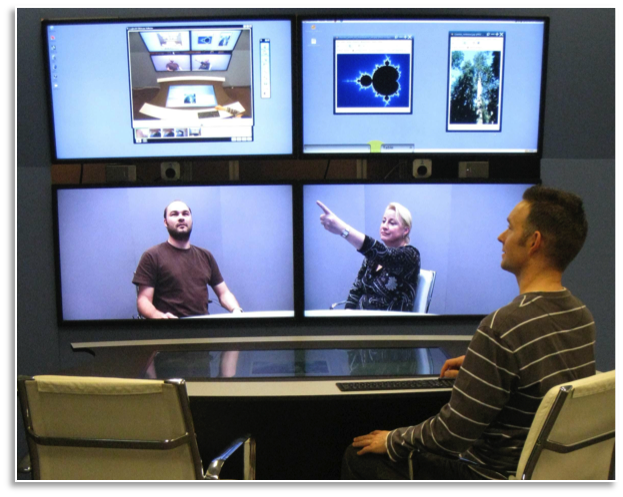

Being able to work with others in a face-to-face situation allows people to negotiate and relate to each other in ways that are beyond spoken words, voice inflections and facial expressions. Adding shared documents into this workspace allows people to point to and move shared objects and information while working remotely with others. Our blended spaces project created a virtual shared space where people can work with documents over a video conference system, while at the same time being able to interact and relate to each other using natural modes, such as eye contact and gestures, that one would use at a similar meeting held in the same physical space. Through careful design of physical space and interactive software objects, we created a unique environment where people who were remotely located could feel like they were talking and passing around documents as if they were co-located.

Contextual Computing

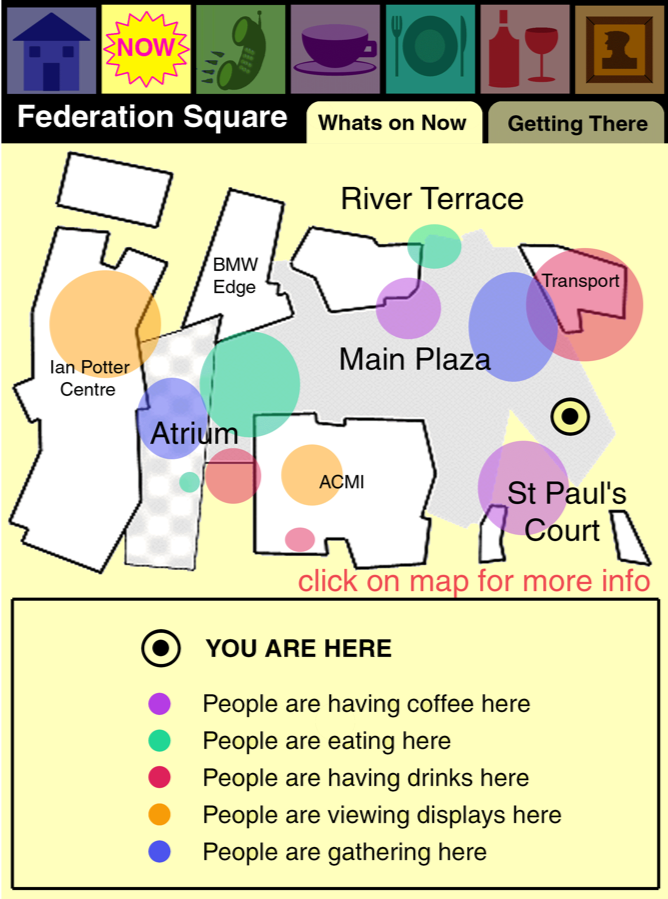

Adding a layer of digital information to urban built environments gives people access to information that might otherwise not be directly visible. In this way we are "making the invisible, visible", providing information and control over spaces and buildings in our environment. Within this, my work in Human-Building Interaction looks at the design and use of computer technologies linked to the built environment, focussing not only on the interface between people and computers, but on the interaction between people and the environment, for example, opportunities for augmenting public spaces with technology. Smart homes, smart buildings and smart technologies pervade the places we live in, and the built environment hosts an abundance of sensors and actuators, both inside and outside buildings, which can both change the characteristics of that environment and give us the ability to know more about the environment. This opens up opportunities to simulate, control and modify spaces and people’s behaviours within the built environment to be more sustainable, more productive and healthier. For example, digital twins of the built environment can be used to improve health outcomes for inhabitants or reduce energy consumption.

Augmented reality makes it possible to superimpose a layer of information over the world. Using the camera facility provided by smartphones, we have made early explorations into how to augment the world with either facts or fictional content. Using the phone screen as the view-finder, we have introduced the use of AR into several different contexts of use. Examples include using AR on a mobile phone for knowing more about the city around you, for weaving fictional stories around familiar places that you travel to, for siting your new home on your block of land and creating redesigns in a contextual architecture mode, and for putting a layer of transport information on the world, such as how far away the next bus is from your current location.

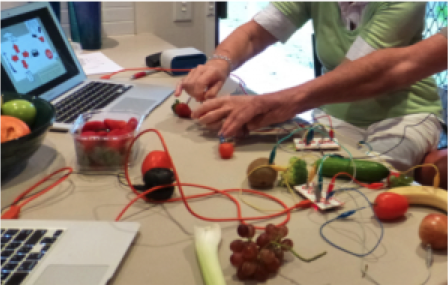

Health behavior change is a relatively new research area for technological intervention. Persuasive technology design and positive computing are both areas of interest for people looking into technology solutions for helping people to change behaviors that are detrimental to their health, or to increase people’s general well-being. We have worked with smokers to rethink the concept of the “mobile health app”, analyzing what it can and cannot do to help people quit smoking. Through field studies with users, we discovered the idea of combining the convenience and just-in-place attributes of the mobile phone in the daily activities of a person who is trying to quit smoking with the efficacy of personalized counseling. Taking advantage of the smartphone’s ability to automatically register certain characteristics of context, coupled with simple user reporting on smoking events, we were able to create an app that reportedly helped people in their attempt to stop smoking. I have also been involved in presenting work with technology supporting people with dementia. Using Internet of Things technologies, including sensors embedded in everyday objects and identifying tags carried by residents, a system was designed to deliver relevant snippets of information to a carer’s smart watch. The information linked a resident to a nearby object that was meaningful to them, making it easy for carers to start a relevant and meaningful conversation involving the resident’s memories. We have also studied creativity among older adults, doing field studies with MakeyMakey toolkits in their homes and inviting groups of older adults to explore technology and propose novel ideas for possible future applications in their everyday lives.